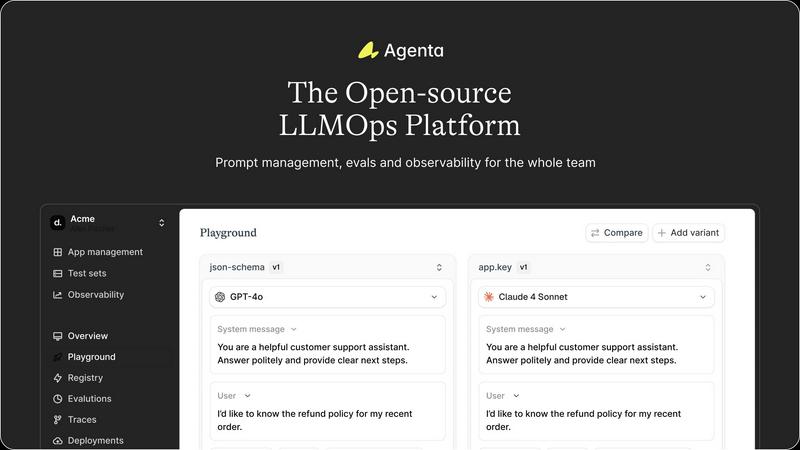

Agenta

Agenta is the open-source LLMOps platform that empowers teams to build reliable AI apps together.

About Agenta

Agenta is an open-source LLMOps platform that helps AI teams build and ship reliable, production-grade LLM applications. It targets the common problems of LLM development: unpredictable model behavior, scattered workflows, siloed teams, and a lack of validation. Agenta provides a unified, collaborative environment where developers, product managers, and domain experts experiment with, evaluate, and observe their AI systems. Its main value lies in centralizing the LLM development lifecycle, from prompt ideation and model comparison to automated evaluation and production debugging, in a single source of truth. By replacing guesswork with evidence, Agenta lets teams iterate systematically, validate every change, and pinpoint issues quickly.

Features of Agenta

Unified Experimentation Playground

Agenta's unified playground is a collaborative hub that allows teams to experiment with prompts and models side-by-side in real-time. It provides a model-agnostic interface, freeing you from vendor lock-in and enabling direct comparison of outputs from different providers like OpenAI, Anthropic, or open-source models. With complete version history for every prompt iteration, teams can track changes, revert if needed, and debug issues using real production data, turning every error into a learning opportunity for rapid improvement.
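To make the idea of prompt version history concrete, here is a minimal sketch in plain Python. It is not Agenta's SDK or data model; the `PromptHistory` class and its methods are hypothetical names chosen for illustration. It shows one common design: a revert is recorded as a new version, so the history is never rewritten.

```python
from dataclasses import dataclass, field


@dataclass
class PromptHistory:
    """Hypothetical append-only history of prompt templates (not Agenta's API)."""

    versions: list[str] = field(default_factory=list)

    def commit(self, template: str) -> int:
        """Store a new template and return its 1-based version number."""
        self.versions.append(template)
        return len(self.versions)

    def get(self, version: int) -> str:
        """Return the template stored under a 1-based version number."""
        if not 1 <= version <= len(self.versions):
            raise IndexError(f"unknown prompt version: {version}")
        return self.versions[version - 1]

    def revert(self, version: int) -> int:
        """Revert by committing a copy of an older version; nothing is deleted."""
        return self.commit(self.get(version))


history = PromptHistory()
v1 = history.commit("Summarize the text: {text}")
v2 = history.commit("Summarize the text in one sentence: {text}")
v3 = history.revert(v1)  # version 3 has the same content as version 1
```

Because reverting appends rather than overwrites, every version that was ever in use can still be inspected later.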

Automated & Flexible Evaluation Framework

This feature replaces subjective guesswork with a rigorous, evidence-based evaluation process. Agenta allows you to create a systematic testing pipeline using LLM-as-a-judge, built-in metrics, or your own custom code as evaluators. Crucially, you can evaluate the full trace of complex agentic workflows, testing each intermediate reasoning step, not just the final output. This enables teams to validate every change before deployment and integrate human feedback from domain experts directly into the evaluation workflow for comprehensive assessment.
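As an illustration of evaluating intermediate steps with custom code, the sketch below scores each step of a recorded trace instead of only the final answer. It is plain Python under stated assumptions: the `Step` type, the `contains_keywords` scorer, and `evaluate_trace` are hypothetical and do not represent Agenta's evaluator interface.

```python
from dataclasses import dataclass


@dataclass
class Step:
    """One intermediate step of an agent run (hypothetical shape)."""

    name: str
    output: str


def contains_keywords(output: str, keywords: list[str]) -> float:
    """Return the fraction of expected keywords found in the output (case-insensitive)."""
    if not keywords:
        return 1.0
    text = output.lower()
    hits = sum(1 for keyword in keywords if keyword.lower() in text)
    return hits / len(keywords)


def evaluate_trace(steps: list[Step], expectations: dict[str, list[str]]) -> dict[str, float]:
    """Score every step that has expectations, keyed by step name."""
    return {
        step.name: contains_keywords(step.output, expectations[step.name])
        for step in steps
        if step.name in expectations
    }


steps = [
    Step("retrieve", "Paris is the capital of France"),
    Step("answer", "The capital is Paris."),
]
expectations = {"retrieve": ["Paris", "France"], "answer": ["Paris", "Berlin"]}
scores = evaluate_trace(steps, expectations)
```

A keyword check is only a stand-in; the same structure works with any scoring function, including an LLM-as-a-judge call, as long as it maps a step's output to a score.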

Production Observability & Debugging

Agenta delivers deep visibility into your live AI applications by tracing every single request. This allows teams to find the exact failure points in complex chains or agentic reasoning. Any production trace can be instantly annotated by your team or end-users and converted into a test case with a single click, creating a powerful feedback loop. Furthermore, live online evaluations monitor performance continuously, helping detect regressions and ensuring system reliability post-deployment.
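The trace-to-test-case feedback loop can be sketched as a small data transformation. The example below is plain Python, not Agenta's API: the `Trace` and `RegressionCase` types, their field names, and the `corrected_output` annotation key are all assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Trace:
    """A recorded production request (hypothetical shape)."""

    trace_id: str
    prompt_version: str
    inputs: dict
    output: str
    annotations: dict = field(default_factory=dict)


@dataclass
class RegressionCase:
    """A test case derived from a trace (hypothetical shape)."""

    inputs: dict
    expected_output: str
    source_trace_id: str
    prompt_version: str


def trace_to_case(trace: Trace) -> RegressionCase:
    """Build a test case, preferring a reviewer's corrected output when one exists."""
    expected = trace.annotations.get("corrected_output", trace.output)
    return RegressionCase(
        inputs=dict(trace.inputs),  # copy so later edits do not alter the trace
        expected_output=expected,
        source_trace_id=trace.trace_id,
        prompt_version=trace.prompt_version,
    )


trace = Trace(
    trace_id="t-001",
    prompt_version="v3",
    inputs={"question": "What is the refund window?"},
    output="60 days",
    annotations={"corrected_output": "30 days"},
)
case = trace_to_case(trace)
```

Keeping the source trace ID and prompt version on the test case preserves the link between a failure seen in production and the prompt version that produced it.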

Cross-Functional Collaboration Tools

Designed to break down silos, Agenta brings product managers, developers, and subject matter experts into a single, cohesive workflow. It provides a safe, intuitive UI for domain experts to edit and experiment with prompts without writing code. Product managers can run evaluations and compare experiment results directly from the interface. With full parity between its API and UI, Agenta seamlessly integrates programmatic development with collaborative oversight, making it the central hub for all stakeholders.

Use Cases of Agenta

Streamlining Enterprise Chatbot Development

AI teams building customer-facing or internal support chatbots use Agenta to manage countless prompt variations for different intents and tones. Product managers and customer experience experts collaborate with engineers in the playground to refine responses. Automated evaluations against quality and safety benchmarks ensure each new prompt version is an improvement before being rolled out to thousands of users, significantly reducing deployment risk.

Building and Validating Complex AI Agents

Teams developing multi-step AI agents (e.g., for research, data analysis, or workflow automation) use Agenta to trace and debug an agent's entire reasoning chain when it fails. They evaluate each step's output using custom logic rather than judging only the final answer. This systematic validation is critical for moving beyond prototypes to robust, trustworthy agentic systems ready for production environments.

Managing Rapid Prompt Iteration for A/B Testing

Marketing and product teams running A/B tests on AI-generated content (like ad copy, email subject lines, or product descriptions) use Agenta to centralize all prompt experiments. They can compare outputs from different models, run bulk evaluations using specific success criteria, and maintain a clear version history of what was deployed. This brings data-driven rigor to creative processes and accelerates optimization cycles.

Academic and Research Team Collaboration

Research teams and academic labs working on LLM benchmarks, novel prompting techniques, or reproducibility studies utilize Agenta's open-source platform to collaborate on experiments. They can version-control their prompting methodologies, run consistent automated evaluations across model updates, and share observable traces of model behavior, making their research more structured, collaborative, and transparent.

Frequently Asked Questions

Is Agenta really open-source?

Yes, Agenta is a fully open-source LLMOps platform. You can dive into the codebase on GitHub, self-host the platform on your own infrastructure, and contribute to its development. This ensures transparency, avoids vendor lock-in, and allows for deep customization to fit specific enterprise needs and security requirements.

How does Agenta integrate with existing AI frameworks?

Agenta is designed for seamless integration with the modern AI stack. It works natively with popular frameworks like LangChain and LlamaIndex, and is model-agnostic, supporting APIs from OpenAI, Anthropic, Cohere, and open-source models. You can integrate Agenta's evaluation and observability features into your existing applications with minimal disruption.

Can non-technical team members really use Agenta effectively?

Absolutely. A core design principle of Agenta is to democratize LLM development. It provides an intuitive web UI that allows product managers, domain experts, and other non-coders to safely edit prompts, run comparative experiments, and review evaluation results without needing to write or understand code, fostering true cross-functional collaboration.

What makes Agenta different from just using a spreadsheet and separate monitoring tools?

Agenta replaces scattered tools with an integrated, version-controlled system. Unlike spreadsheets, it offers direct experimentation and automated evaluation pipelines, and it links every production trace back to its exact prompt version. This creates a closed feedback loop that is hard to achieve with disconnected tools, turning ad-hoc workflows into a structured, reliable engineering process.

Pricing of Agenta

As an open-source platform, Agenta's core software is freely available for download, self-hosting, and use. The company offers commercial support, enterprise features, and managed cloud services. For detailed information on enterprise pricing tiers, managed hosting options, and support plans, you are encouraged to visit the official Agenta website or "Book a demo" to discuss specific needs with their team.
